NVIDIA Issues Statement on GeForce GTX 970 Memory Allocation Issue #Ramgate

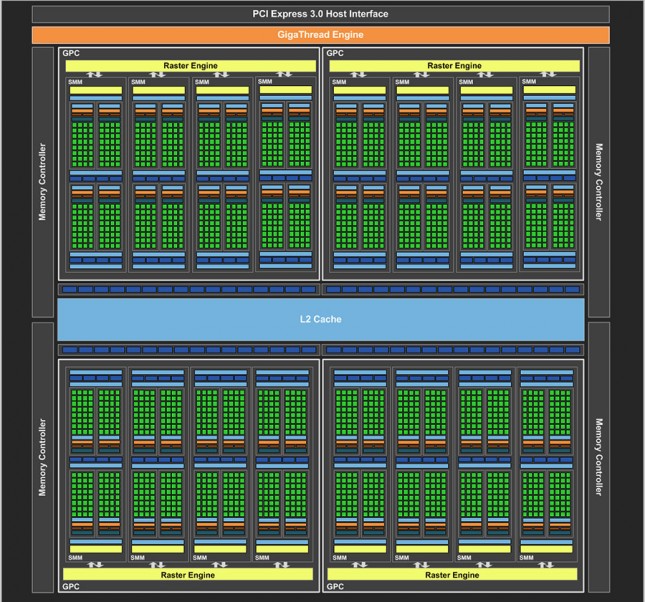

The NVIDIA GeForce GTX 980 video card uses the full GM204 Maxwell GPU, which contains four graphics processing clusters (GPCs). Each GPC houses four SMM units and each SMM has 128 CUDA cores, so there are 512 CUDA cores per GPC. That means the fully enabled GM204 GPU has four GPCs, 16 SMMs and 2,048 CUDA cores. The NVIDIA GeForce GTX 970 features a partially disabled GM204 GPU, and as a result it has 13 SMM units instead of the 16 SMMs on the GeForce GTX 980. With just 13 SMMs enabled, there are 1,664 functional CUDA cores. Cutting down a full GPU for use in less powerful cards is something AMD and NVIDIA have done for years, and to be honest it usually doesn’t have any unknown or hidden side effects.
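The core counts above follow directly from the SMM counts. Here is a minimal Python sketch of that arithmetic; the constant and function names are our own, chosen only for illustration, and the figures are the ones quoted in the paragraph above.

```python
# Figures taken from the paragraph above; the names are illustrative only.
CORES_PER_SMM = 128   # CUDA cores in each SMM
SMMS_PER_GPC = 4      # SMM units in each graphics processing cluster
GPC_COUNT = 4         # GPCs in a fully enabled GM204


def cuda_cores(enabled_smms: int) -> int:
    """Return the number of functional CUDA cores for a given SMM count."""
    return enabled_smms * CORES_PER_SMM


full_smms = SMMS_PER_GPC * GPC_COUNT  # 16 SMMs on the GTX 980

print("GTX 980:", cuda_cores(full_smms), "CUDA cores")
print("GTX 970:", cuda_cores(13), "CUDA cores")
```

Going from 16 SMMs to 13 removes 3 × 128 = 384 cores, which is the gap between the two cards.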

In recent weeks, users have reported that the GeForce GTX 970 has performance issues when using more than 3.5GB of VRAM. The community believes that disabling three SMMs on the GM204-200 GPU used on the GeForce GTX 970 cripples the memory subsystem, and that there is therefore a fairly significant and noticeable performance penalty for using that last 0.5GB of VRAM. It appears this was something NVIDIA was aware of in the design phase of the GeForce GTX 970, and that they purposely segmented the graphics memory into 3.5GB and 0.5GB segments. NVIDIA released the following statement to help calm the concerns that some have about the GeForce GTX 970 and the way its memory operates.

The GeForce GTX 970 is equipped with 4GB of dedicated graphics memory. However the 970 has a different configuration of SMs than the 980, and fewer crossbar resources to the memory system. To optimally manage memory traffic in this configuration, we segment graphics memory into a 3.5GB section and a 0.5GB section. The GPU has higher priority access to the 3.5GB section. When a game needs less than 3.5GB of video memory per draw command then it will only access the first partition, and 3rd party applications that measure memory usage will report 3.5GB of memory in use on GTX 970, but may report more for GTX 980 if there is more memory used by other commands. When a game requires more than 3.5GB of memory then we use both segments.

We understand there have been some questions about how the GTX 970 will perform when it accesses the 0.5GB memory segment. The best way to test that is to look at game performance. Compare a GTX 980 to a 970 on a game that uses less than 3.5GB. Then turn up the settings so the game needs more than 3.5GB and compare 980 and 970 performance again.

Here’s an example of some performance data:

| Game | Setting | GTX 980 | GTX 970 |
| --- | --- | --- | --- |
| Shadows of Mordor | <3.5GB setting = 2688×1512 Very High | 72fps | 60fps |
| Shadows of Mordor | >3.5GB setting = 3456×1944 | 55fps (-24%) | 45fps (-25%) |
| Battlefield 4 | <3.5GB setting = 3840×2160 2xMSAA | 36fps | 30fps |
| Battlefield 4 | >3.5GB setting = 3840×2160 135% res | 19fps (-47%) | 15fps (-50%) |
| Call of Duty: Advanced Warfare | <3.5GB setting = 3840×2160 FSMAA T2x, Supersampling off | 82fps | 71fps |
| Call of Duty: Advanced Warfare | >3.5GB setting = 3840×2160 FSMAA T2x, Supersampling on | 48fps (-41%) | 40fps (-44%) |

On GTX 980, Shadows of Mordor drops about 24% on GTX 980 and 25% on GTX 970, a 1% difference. On Battlefield 4, the drop is 47% on GTX 980 and 50% on GTX 970, a 3% difference. On CoD: AW, the drop is 41% on GTX 980 and 44% on GTX 970, a 3% difference. As you can see, there is very little change in the performance of the GTX 970 relative to GTX 980 on these games when it is using the 0.5GB segment.
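For readers who want to check the percentages in NVIDIA's statement, here is a minimal Python sketch that recomputes them from the quoted frame rates. The data layout and function names are our own assumptions for illustration; only the fps figures come from the statement. Note that these are average frame rates, so the sketch says nothing about frame times or stutter.

```python
# (fps at the <3.5GB setting, fps at the >3.5GB setting), as quoted by NVIDIA.
RESULTS = {
    "Shadows of Mordor": {"GTX 980": (72, 55), "GTX 970": (60, 45)},
    "Battlefield 4": {"GTX 980": (36, 19), "GTX 970": (30, 15)},
    "Call of Duty: Advanced Warfare": {"GTX 980": (82, 48), "GTX 970": (71, 40)},
}


def percent_drop(before: float, after: float) -> float:
    """Percentage of frame rate lost when moving from `before` to `after`."""
    return (before - after) / before * 100


def summarize(results: dict) -> dict:
    """Map each game to (980 drop, 970 drop, gap), rounded to whole percent."""
    summary = {}
    for game, cards in results.items():
        drop_980 = round(percent_drop(*cards["GTX 980"]))
        drop_970 = round(percent_drop(*cards["GTX 970"]))
        # The gap is a difference in percentage points, not a relative percent.
        summary[game] = (drop_980, drop_970, drop_970 - drop_980)
    return summary


for game, (d980, d970, gap) in summarize(RESULTS).items():
    print(f"{game}: GTX 980 -{d980}%, GTX 970 -{d970}%, gap {gap} points")
```

The rounded results match the figures in the statement. The "1%" and "3%" differences NVIDIA cites are gaps between two percentage drops, that is, percentage points.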

Basically, NVIDIA says it has tested this concern in depth and found that, in these games, the GTX 970’s performance drop is at most 3 percentage points larger than the GTX 980’s when using more than 3.5GB of memory. Many in the community are saying that they don’t trust NVIDIA’s numbers and that they don’t care about averages. Some are using the hashtag #Ramgate to talk about the issue on Twitter, and some want NVIDIA to make public the memory bandwidth of the 0.5GB memory segment. Others think that this will end up being the biggest non-issue ever.

We’ll let you know if more comes up on the situation! If you have any questions or concerns please leave them in the comment section below. LR will forward these questions to NVIDIA to try to get answers for you.