NVIDIA GeForce GTX 680 Video Card Review

NVIDIA GPU Boost, Adaptive VSync & TXAA

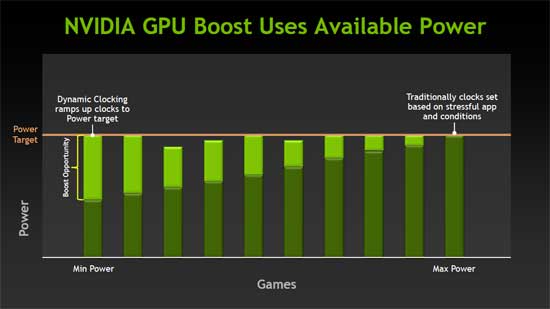

Among the other features NVIDIA is launching with the GeForce GTX 680 is GPU Boost. If you have been following the processor scene over the last year or so, you know that both Intel and AMD have been implementing their own versions of Turbo in their processors. Intel brought its Turbo Boost 2.0 with the launch of ‘Sandy Bridge’, while AMD introduced Turbo Core with the Phenom II X6 processors and further pushed the envelope with the FX ‘Bulldozer’ series. Each of these raises the clock speed of the processor cores to a predetermined level depending on usage. NVIDIA’s GPU Boost works in a very similar fashion, though rather than raising multipliers, it works strictly on the clock frequency.

There are a number of factors that GPU Boost takes into consideration when dynamically setting both the frequency and voltage on the GPU and memory.

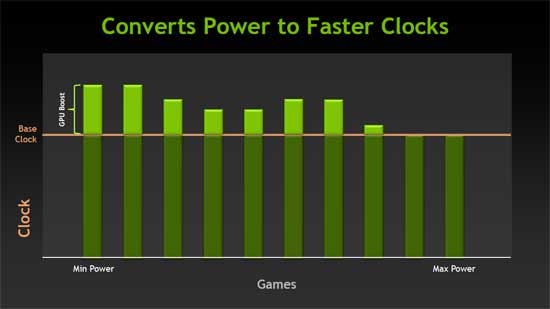

It is much more dynamic than that: there is no predetermined level that the GPU core will be raised to. The key to NVIDIA GPU Boost is that the GeForce GTX 680, built on the new Kepler architecture, has a TDP of only 195 Watts, but not all 3D applications will bring the card to that level. The GTX 680 runs its base GPU clock of 1006MHz as a minimum speed in 3D applications that push the Kepler core to the TDP limit. Now here’s where things start to get tricky. If you’re running a 3D application that isn’t bringing the GTX 680 up to its TDP, the clock speed will dynamically increase until the card reaches its set power target!

The deciding factor is how much power the GeForce GTX 680 is currently drawing. If it is running at 180 Watts, you will get a smaller boost than if it is running at only 160 Watts, because GPU Boost raises the GPU frequency until power draw comes up to the set maximum. One of the great aspects of GPU Boost is that, much like the processors’ Turbo technologies, it all happens seamlessly in the background with no intervention by the user!
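To make the power-target behavior concrete, here is a minimal Python sketch of a boost loop. It is not NVIDIA’s algorithm: it assumes power draw scales linearly with clock speed (real silicon also raises voltage, so power climbs faster than this), and the 13MHz step size, the 200MHz ceiling, and the `boost_clock` name are hypothetical values chosen for illustration. It starts at the 1006MHz base clock and keeps stepping up while the estimated power stays at or under the 195-Watt target.

```python
def boost_clock(base_mhz: int, power_at_base_w: float, power_target_w: float,
                step_mhz: int = 13, max_offset_mhz: int = 200) -> int:
    """Raise the clock one step at a time while estimated power stays within the target.

    Hypothetical model: power is assumed to scale linearly with clock speed,
    and step_mhz / max_offset_mhz are illustrative values, not NVIDIA's.
    """
    clock = base_mhz
    while clock + step_mhz <= base_mhz + max_offset_mhz:
        # Estimate the power draw one step higher (linear-scaling assumption).
        est_power_w = power_at_base_w * (clock + step_mhz) / base_mhz
        if est_power_w > power_target_w:
            break  # the next step would exceed the power target, so stop here
        clock += step_mhz
    return clock


for draw_w in (195, 180, 160):
    print(f"{draw_w} W at base clock -> {boost_clock(1006, draw_w, 195)} MHz")
```

Under these assumptions, a card already drawing 195 Watts stays at 1006MHz, one drawing 180 Watts boosts to 1084MHz, and one drawing 160 Watts climbs to 1201MHz, where the hypothetical ceiling stops it. That matches the qualitative point above: the less power an application draws, the larger the boost.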

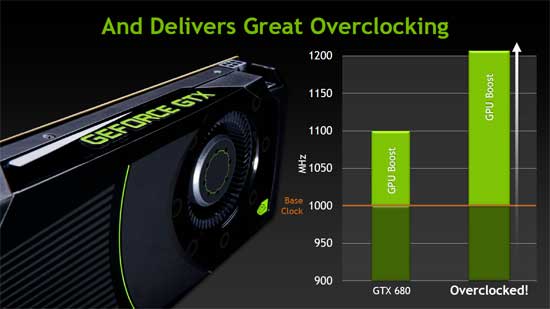

One advantage NVIDIA GPU Boost has over the processor Turbo implementations mentioned above is that it works alongside overclocking. With processors you can raise the Turbo multiplier, the base multiplier, or, on some motherboards, both. With the GTX 680 you can overclock the GPU, and GPU Boost will then push the card further still! It will be interesting to see how this pans out for overclockers who like to truly push their GeForce GTX 680s with liquid nitrogen. We have been told that the GTX 680 is going to be a very friendly card for sub-zero cooling, and I am definitely looking forward to seeing these cards under liquid nitrogen and other extreme cooling in the future!

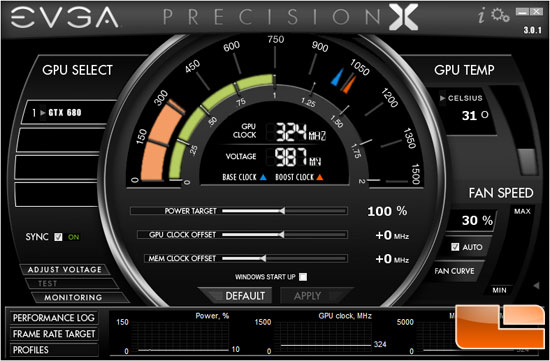

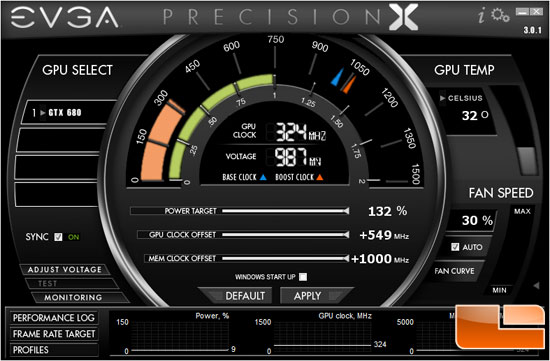

In the latest version of EVGA’s Precision X software we can tweak the GPU Boost settings. More specifically, the Power Target, or TDP, of the GeForce GTX 680 can be increased, which should give GPU Boost more headroom to work with. Out of the box, though, NVIDIA found that the typical boost frequency, or ‘Boost Clock’ (the average clock frequency the GPU runs at in applications that don’t reach the TDP), is 1058MHz, just a hair over 5% above the 1006MHz base clock. According to NVIDIA, however, many of the non-TDP applications it ran during internal testing saw GPU Boost take the GeForce GTX 680 to 1.1GHz or higher!
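The percentages here are simple ratios against the 1006MHz base clock. A quick Python check, using a hypothetical helper named `uplift_pct`:

```python
BASE_CLOCK_MHZ = 1006  # GTX 680 base clock quoted in the article


def uplift_pct(clock_mhz: float, base_mhz: float = BASE_CLOCK_MHZ) -> float:
    """Percentage by which clock_mhz exceeds base_mhz."""
    return (clock_mhz - base_mhz) / base_mhz * 100.0


print(f"{uplift_pct(1058):.1f}")  # 5.2 (NVIDIA's 1058MHz Boost Clock)
print(f"{uplift_pct(1100):.1f}")  # 9.3 (the 1.1GHz seen in NVIDIA's testing)
```

So the quoted Boost Clock is about a 5% uplift, while the 1.1GHz results NVIDIA mentions are closer to 9%.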

Taking a quick look at the options we can adjust in EVGA Precision X, there are the Power Target mentioned above, the GPU Clock Offset, and the Memory Clock Offset. We can take the Power Target up to 132%, which works out to 225 watts, an increase of 30 watts over the 195-watt TDP. (The most this card can pull at board level is 225 watts: 75W from each of its two 6-pin connectors plus 75W from the motherboard’s PCIe slot.) In other words, NVIDIA lets users raise the power target to the maximum board power without violating the PCIe specification. The GPU Clock Offset can slide up another 549MHz, at least in EVGA Precision X; we’ll play with that more on the overclocking page later in the article. The Memory Clock Offset can go up another 1000MHz.
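The 225-watt ceiling is plain connector arithmetic, sketched below in Python (`max_board_power_w` is a hypothetical helper). The sketch also makes one thing visible: 132% of the 195-watt TDP would be about 257 watts, so the 132% slider maximum and the 225-watt ceiling only line up if 100% corresponds to roughly 170 watts rather than the full TDP. That baseline is inferred from the numbers in this article, not something stated here.

```python
PCIE_SLOT_W = 75   # power a PCIe x16 graphics slot can supply
SIX_PIN_W = 75     # power per 6-pin PCIe auxiliary connector
GTX680_TDP_W = 195


def max_board_power_w(six_pin_connectors: int) -> int:
    """Slot power plus the power of each 6-pin auxiliary connector."""
    return PCIE_SLOT_W + six_pin_connectors * SIX_PIN_W


limit_w = max_board_power_w(2)
print(limit_w)                  # 225
print(limit_w - GTX680_TDP_W)   # 30, the extra headroom over the TDP
print(round(limit_w / 1.32))    # 170, the 100% baseline implied by a 132% maximum
```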

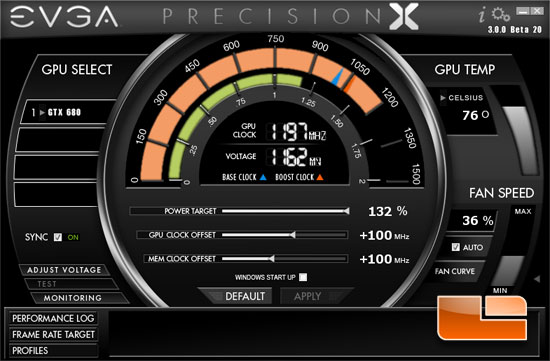

We were just toying with the settings at this point, so call it a little teaser for our overclocking section. We slid the Power Target to the maximum 132%, bumped the GPU and memory clock offsets each up 100MHz, and opened up Heaven 3.0. Before the temperature reached the 76 degrees Celsius you can see above, GPU Boost topped out at 1215MHz! That is more than 200MHz above the stock 1006MHz base clock! The screenshot shows that although the frequency has dropped from 1215MHz, we are still cooking along at 1197MHz. I’m betting we can get some more out of it when we sit down for a proper overclocking session, but we’ll just have to wait and see.
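Reading those numbers against two reference points helps separate what the offset contributed from what GPU Boost added on top. A small Python sketch, assuming the +100MHz GPU Clock Offset simply shifts the 1006MHz base clock up to 1106MHz; `clock_gains` is a hypothetical helper:

```python
from typing import Tuple

STOCK_BASE_MHZ = 1006


def clock_gains(observed_mhz: int, offset_mhz: int,
                stock_base_mhz: int = STOCK_BASE_MHZ) -> Tuple[int, int]:
    """Return (gain over the stock base clock, gain over the offset base clock).

    Assumption: the GPU Clock Offset raises the base clock by exactly offset_mhz.
    """
    offset_base_mhz = stock_base_mhz + offset_mhz
    return observed_mhz - stock_base_mhz, observed_mhz - offset_base_mhz


print(clock_gains(1215, 100))  # (209, 109): the peak before reaching 76 C
print(clock_gains(1197, 100))  # (191, 91): after the clock dropped back
```

Under that assumption, roughly 109MHz of the 1215MHz peak came from GPU Boost stacking on top of the offset.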

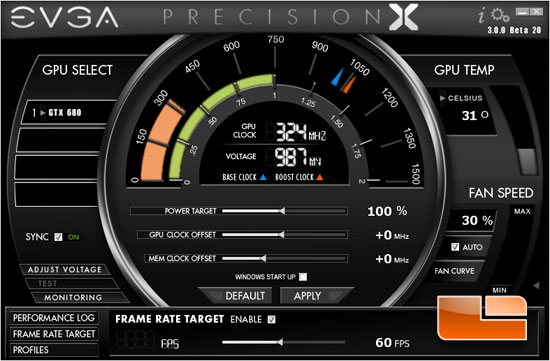

You can also set a frame rate target with EVGA Precision X, which is essentially a frame rate limiter. If you set an FPS target (the application needs to be restarted afterward), the application will be locked to that maximum frame rate. The cool thing with the GTX 680 is that the card will lower its clock speed to match the target. That makes it good for older games that run at something like 200 FPS: you can set a frame cap of 100 FPS and the card will lower its clocks, voltage, and power to maintain 100 FPS, rather than wasting power and generating extra heat, while also reducing tearing.
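A frame rate limiter boils down to giving each frame a fixed time budget and idling for whatever is left over. The Python sketch below shows that idea; it is not how Precision X or the driver implements it. The function names are hypothetical, and `clock_fraction_needed` assumes frame rate scales linearly with GPU clock, which real games only approximate.

```python
import time
from typing import Callable


def frame_budget_ms(target_fps: float) -> float:
    """Time available for each frame at the capped rate."""
    return 1000.0 / target_fps


def clock_fraction_needed(uncapped_fps: float, target_fps: float) -> float:
    """Rough share of the current clock needed to hold target_fps.

    Assumes frame rate scales linearly with GPU clock (an approximation).
    """
    return min(1.0, target_fps / uncapped_fps)


def run_capped(render_frame: Callable[[], None], target_fps: float, frames: int) -> None:
    """Minimal limiter: render a frame, then sleep off the rest of its time budget."""
    budget_s = 1.0 / target_fps
    for _ in range(frames):
        start = time.perf_counter()
        render_frame()
        elapsed_s = time.perf_counter() - start
        if elapsed_s < budget_s:
            time.sleep(budget_s - elapsed_s)


print(frame_budget_ms(100))             # 10.0
print(clock_fraction_needed(200, 100))  # 0.5
```

For the 200 FPS example above, each frame takes about 5ms but gets a 10ms budget at a 100 FPS cap, so the GPU sits idle roughly half the time. That spare time is what lets the card drop its clocks and voltage instead.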

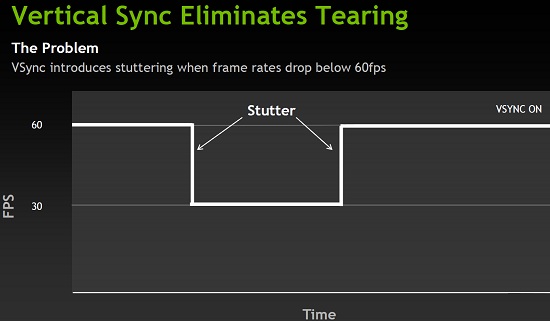

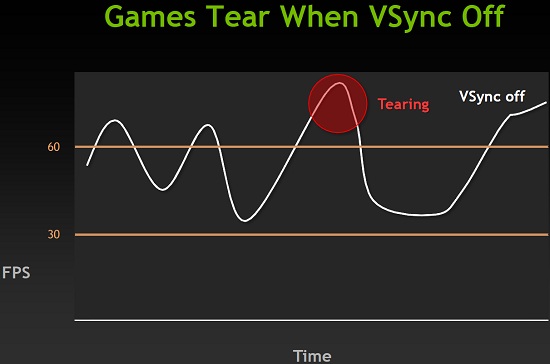

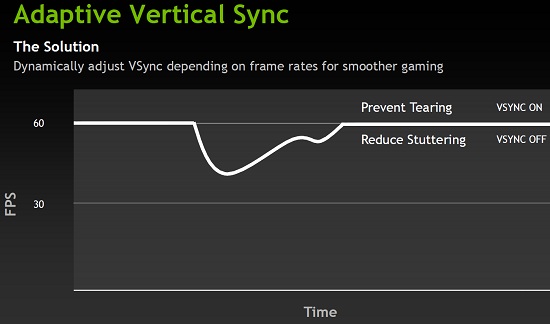

NVIDIA has also come up with something new called Adaptive VSync, which dynamically turns VSync off only when the frame rate drops below the display’s refresh rate. Most gamers know that VSync caps the frame rate at the display’s refresh rate, which is 60 frames per second on a typical 60Hz monitor. VSync is great when your video card can render well above that rate, but not so good when you dip under the 60 FPS mark, because you get stutters when this happens. Once you drop below 60 FPS, VSync snaps the frame rate down to 30 FPS, then 20 FPS and so on if your frame rate is that low. The jump from 60 FPS to 30 FPS is not a smooth transition, which is why you can see it as a small stutter or hesitation.

One solution is to turn VSync off for smoother frame rates, but then the frames are no longer synchronized with the display and you run into screen tearing instead.

What Adaptive VSync does is intelligently adjust this behavior. When frame rates are high, the 60 FPS cap stays in place, but when the frame rate drops below 60 FPS, VSync is switched off and the game or application returns to its native, uncapped behavior. The cap follows the monitor’s native refresh rate, so it will be 60 FPS on some displays and 120 FPS on others. To put it simply, on a 60Hz display Adaptive VSync caps the frame rate at 60 FPS and lets it flow freely below 60 FPS.
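The difference between traditional VSync and Adaptive VSync can be modeled in a few lines of Python. This is a simplified sketch that assumes double buffering and a constant render rate (triple buffering and real frame-time variance behave differently), and it only models frame rate, not tearing; the function names are hypothetical.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: int) -> float:
    """Double-buffered VSync: each frame waits for a refresh, so the rate snaps to refresh/n."""
    if render_fps >= refresh_hz:
        return float(refresh_hz)
    refreshes_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / refreshes_per_frame


def adaptive_vsync_fps(render_fps: float, refresh_hz: int) -> float:
    """VSync on at or above the refresh rate (capped), off below it (uncapped)."""
    return float(min(render_fps, refresh_hz))


for fps in (75, 55, 25):
    print(fps, vsync_fps(fps, 60), adaptive_vsync_fps(fps, 60))
# 75 60.0 60.0
# 55 30.0 55.0
# 25 20.0 25.0
```

A game rendering at 55 FPS drops to 30 FPS with traditional VSync but stays at 55 FPS with Adaptive VSync, which is exactly the stutter the feature is meant to avoid. Passing `refresh_hz=120` models a 120Hz monitor, where the cap moves up to 120 FPS.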

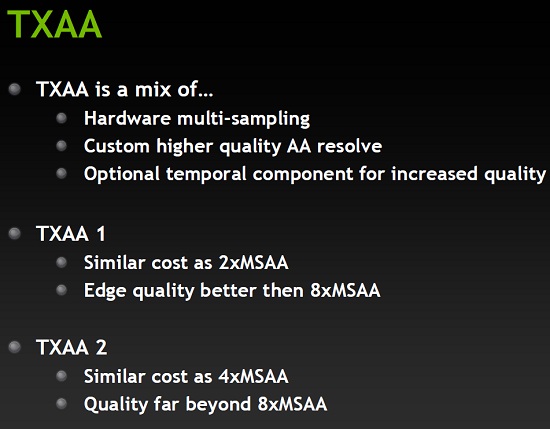

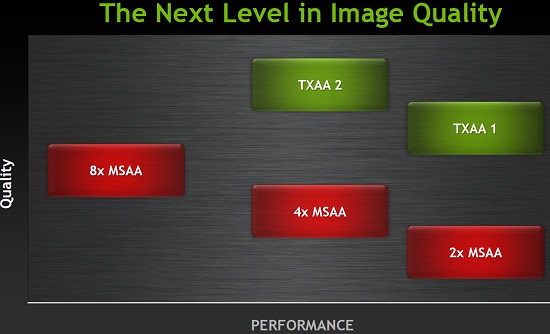

NVIDIA’s new Temporal Anti-Aliasing (TXAA) feature is also worth mentioning, as it should make your games look better while costing less performance than comparable MSAA. For example, it is said to provide 16x MSAA quality at the cost of 2x-4x MSAA without blurring. TXAA is a mix of hardware anti-aliasing, a custom CG film-style AA resolve, and, in the case of TXAA 2, an optional temporal component for better image quality. It’s also a feature that will be added to the GeForce GTX 500 series cards in a later driver release, so it will be available to current owners as well. TXAA will first be implemented in upcoming game titles shipping later this year.

TXAA is available in two modes: TXAA 1 and TXAA 2. TXAA 1 offers better than 8xMSAA visual quality with the performance hit of 2xMSAA, while TXAA 2 offers even better image quality than TXAA 1, with performance comparable to 4xMSAA.

Here is a quick video that covers NVIDIA GPU Boost, TXAA, PhysX and Adaptive VSync.
