Acer Predator XB1 (XB271HU) WQHD (2560×1440) 144 Hz G-Sync Monitor Review

Predator XB271HU: Gaming & Power Consumption

Out of the box, I thought the color accuracy was pretty good, but I adjusted a few settings to get what I feel is a more accurate palette and whiter whites. I do not own a hardware calibration device or other test equipment, so I could not measure the results objectively. The only settings I adjusted were, again, Brightness (65), R Gain (99), G Gain (97), and B Gain (93); everything else stayed at its default.

So what do refresh rate and frame rate have to do with each other, and why is 144 Hz so relevant to the gaming community? Put it this way: a typical LCD is a 60 Hz panel, meaning it refreshes 60 times every second, while a 144 Hz panel refreshes 144 times every second. If you have a graphics card like the NVIDIA GTX 1080, you'll find that even with high image quality settings, it can still pump out a large number of frames per second.
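A fixed-rate panel draws a new image every 1000 ÷ Hz milliseconds, so a higher refresh rate shortens the wait between images. A minimal Python sketch of that arithmetic (the function name is our own, chosen for illustration):

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Milliseconds between refreshes on a fixed-refresh-rate panel."""
    return 1000.0 / refresh_hz


for hz in (60, 144, 165):
    # 60 Hz -> 16.67 ms, 144 Hz -> 6.94 ms, 165 Hz -> 6.06 ms
    print(f"{hz:>3} Hz: new image every {refresh_interval_ms(hz):.2f} ms")
```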

Exactly how does this correlate? If your GTX 1080 averages 150 FPS in a game and you're using a 60 Hz panel, you won't see any difference between 60 FPS and 150 FPS: the panel can only show 60 new images per second, so the extra frames are wasted. Worse, because the GPU delivers new frames out of step with the display's refreshes, you get screen tearing and stuttering, which is highly undesirable. V-SYNC can correct the tearing by limiting your frame rate to the refresh rate (60 FPS for 60 Hz), but it can increase input latency and still stutter. Put a 144 Hz panel in the mix and far fewer frames go to waste, screen tearing is minimal, and you will absolutely be able to tell how much smoother the gameplay is.
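The sketch below puts rough numbers on both effects. It assumes a simplified double-buffered V-SYNC model, in which a finished frame waits for the next refresh and the GPU starts the next frame only after that swap; real drivers, triple buffering, and frame limiters behave differently. The function names and the 15/18 ms frame times are hypothetical.

```python
import math


def frames_shown_per_second(gpu_fps: float, refresh_hz: float) -> float:
    """A fixed-rate panel can show at most one new frame per refresh."""
    return min(gpu_fps, refresh_hz)


def vsync_display_intervals(render_times_ms, refresh_hz):
    """Simplified double-buffered V-SYNC model (an assumption for illustration):
    each finished frame waits for the next refresh boundary. Returns how long
    each frame stays on screen, in milliseconds."""
    period = 1000.0 / refresh_hz
    # Each frame occupies a whole number of refresh periods (at least one).
    return [max(1, math.ceil(t / period)) * period for t in render_times_ms]


# 150 FPS on a 60 Hz vs. a 144 Hz panel
for hz in (60, 144):
    shown = frames_shown_per_second(150, hz)
    print(f"{hz} Hz: {shown} of 150 frames shown, {1 - shown / 150:.0%} wasted")

# A GPU alternating between 15 ms and 18 ms frames under V-SYNC
render_times = [15, 18, 15, 18]
for hz in (60, 144):
    intervals = vsync_display_intervals(render_times, hz)
    print(f"{hz} Hz V-SYNC:", [round(x, 1) for x in intervals])
```

In this toy model, the 60 Hz panel alternates between 16.7 ms and 33.3 ms on screen per frame, which is the uneven pacing felt as stutter. At 144 Hz a finished frame never waits more than about 6.9 ms for the next refresh, versus up to 16.7 ms at 60 Hz.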

Now what about G-SYNC? G-SYNC works by matching the panel's refresh rate (Hz) to your graphics card's frame rate (FPS). So if you can't hold a consistent 144 FPS with G-SYNC enabled and instead jump between, say, 95 and 130 FPS, the panel will refresh 95 to 130 times per second (Hz) to match; the frame rate and refresh rate stay perfectly in sync. Because each frame is drawn as soon as the GPU finishes it, this eliminates screen tearing and the stutter caused by mismatched timing.
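To illustrate the difference, here is an idealized Python model of variable refresh next to a fixed 144 Hz panel running a simplified double-buffered V-SYNC. It is a sketch under stated assumptions, not how NVIDIA's G-SYNC module actually schedules refreshes: it ignores the panel's minimum refresh rate, and the function names and frame rates are chosen only to match the 95 to 130 FPS example.

```python
import math


def vrr_display_intervals(render_times_ms, max_refresh_hz=144.0):
    """Idealized variable-refresh (G-SYNC-style) model: the panel refreshes as
    soon as a frame is ready, but never faster than its maximum refresh rate.
    Ignores the panel's minimum refresh rate and any frame repeating below it."""
    min_interval = 1000.0 / max_refresh_hz
    return [max(t, min_interval) for t in render_times_ms]


def fixed_vsync_intervals(render_times_ms, refresh_hz=144.0):
    """Simplified double-buffered V-SYNC on a fixed-rate panel:
    each frame waits for the next refresh boundary."""
    period = 1000.0 / refresh_hz
    return [max(1, math.ceil(t / period)) * period for t in render_times_ms]


# Frame times for a GPU wandering between 95 and 130 FPS
frame_times = [1000.0 / fps for fps in (95, 130, 110, 125)]

print("G-SYNC (ms):", [round(t, 2) for t in vrr_display_intervals(frame_times)])
print("V-SYNC (ms):", [round(t, 2) for t in fixed_vsync_intervals(frame_times)])
```

With variable refresh, each frame stays on screen exactly as long as the next one takes to render. In the fixed-refresh model, every frame in the 95 to 130 FPS range just misses one 6.94 ms refresh and is held for two (about 13.9 ms), so output drops to an even 72 FPS.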

I got a chance to try out this monitor with a Gigabyte GeForce GTX 1070 G1 Gaming (using the latest NVIDIA 368.81 drivers) in a few of the latest titles, including Grand Theft Auto V, Metro: Last Light Redux, Battlefield 4, Overwatch, The Witcher 3, and a few others. Having experienced G-SYNC only at CES 2014, I was happy to have a monitor with the capability to use at my leisure. Enabling G-SYNC to get rid of screen tearing and smooth out frame transitions was nothing short of beautiful. The GTX 1070 won't let me hit a full 144 FPS at 144 Hz without sacrificing image quality, so G-SYNC is a welcome alternative to V-SYNC or ULMB at 120 Hz.

1440p gaming is becoming increasingly popular among gamers, and today's newest NVIDIA GeForce GTX series graphics cards handle it very well. We all know that the GTX 1080 is an absolute beast, the GTX 1070 delivers GTX 980 Ti-level performance and handles 1440p very well, and, as we saw yesterday, the GTX 1060 handles this resolution fairly well, too. If you're an AMD user, even the budget Radeon RX 480 can drive 1440p without trouble, as can the Fury X, but keep in mind that you won't be able to take advantage of this monitor's G-SYNC with those cards.

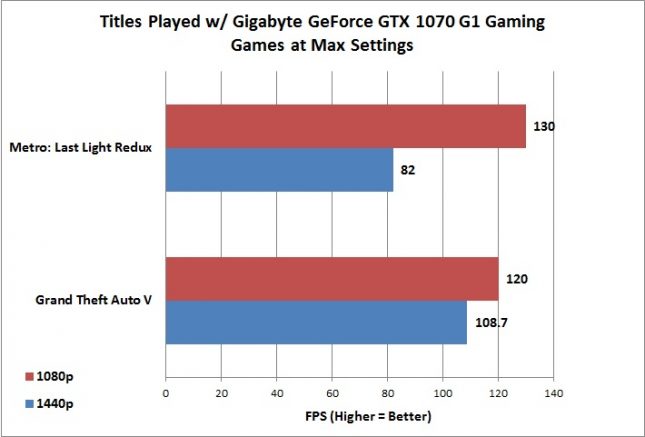

So what is the chart below showing us? For a quick comparison, I ran the GTA V and Metro: Last Light Redux benchmarks on my system to show how they performed at 1080p vs. 1440p. I wanted to know exactly how much the change in resolution would impact my gaming performance. In the end, the frame rate hit seemed pretty minimal to me, and what I gained was a much nicer-looking, higher-resolution image. Everything appears crisper and more pleasant to look at. Metro did show a fairly large difference between 1080p and 1440p; however, gameplay was still very smooth, with no chop or stutter.

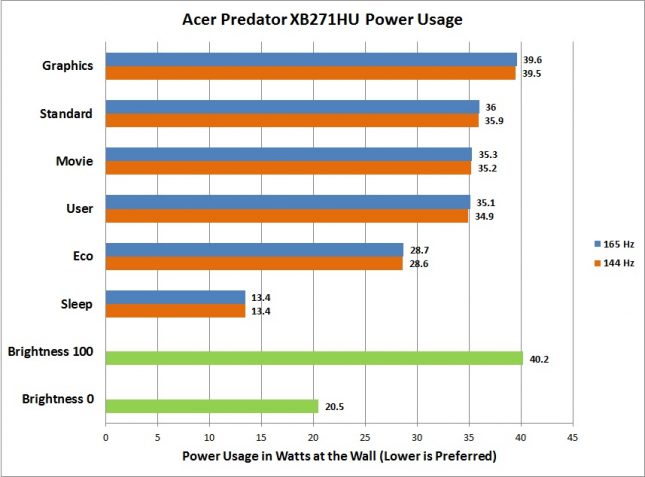
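To put the resolution jump in numbers: 2560×1440 has about 78% more pixels than 1920×1080. The sketch below computes that ratio plus a naive frame-rate estimate that assumes rendering cost scales linearly with pixel count. That assumption is ours, and many games lose less because part of each frame's cost does not depend on resolution; the 100 FPS input is a made-up example, not one of the benchmark results in the chart above.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels the GPU must render per frame."""
    return width * height


qhd = pixel_count(2560, 1440)  # WQHD, the XB271HU's native resolution
fhd = pixel_count(1920, 1080)  # 1080p
ratio = qhd / fhd

print(f"1440p: {qhd:,} px, 1080p: {fhd:,} px, ratio {ratio:.2f}x")


def naive_fps_at_1440p(fps_at_1080p: float) -> float:
    """Rough estimate ASSUMING frame time scales linearly with pixel count
    (a fully fill-rate-bound game)."""
    return fps_at_1080p / ratio


# Hypothetical input, not a measured result
print(f"100 FPS at 1080p -> about {naive_fps_at_1440p(100):.0f} FPS at 1440p")
```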

When measuring power consumption, I used my Kill A Watt P3 meter with the monitor plugged directly into the wall, and I had the Legit Reviews homepage open in Google Chrome. The XB1 topped out at 39.6 watts in Graphics mode. In comparison, my own settings, with 75% brightness, drew 35.1 watts, and the other modes (except Eco) drew similar amounts. In Eco mode the monitor consumed 28.7 watts, while in Sleep mode it used a staggering 13.4 watts. Increasing the refresh rate to 165 Hz raised the power consumption of my User settings by 0.2 watts, and the other modes followed suit with increases of 0.1 to 0.2 watts, which is extremely minimal. Raising the brightness to 100% increased power consumption by approximately 5 watts, while lowering it to 0 reduced it by almost 15 watts. Note that the brightness results were taken from the 'User' profile only, as the other profiles have their own predefined brightness and color settings.
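These readings translate into energy use with one formula: kWh = watts × hours ÷ 1000. The Python sketch below tabulates each mode's measured draw against the 39.6 W Graphics mode and projects yearly energy; the four-hours-per-day figure is an assumption for illustration only, not part of the testing.

```python
# Wattage readings from the Kill A Watt measurements above
readings_w = {
    "Graphics": 39.6,
    "User (75% brightness)": 35.1,
    "Eco": 28.7,
    "Sleep": 13.4,
}

HOURS_PER_DAY = 4  # hypothetical usage pattern, not from the review


def annual_kwh(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy in kilowatt-hours: watts x hours / 1000."""
    return watts * hours_per_day * days / 1000.0


baseline = readings_w["Graphics"]
for mode, watts in readings_w.items():
    saving = baseline - watts  # watts saved relative to Graphics mode
    print(
        f"{mode:<22} {watts:5.1f} W  "
        f"{saving:4.1f} W ({saving / baseline:5.1%}) below Graphics  "
        f"{annual_kwh(watts, HOURS_PER_DAY):5.1f} kWh/year at {HOURS_PER_DAY} h/day"
    )
```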