NVIDIA GeForce GTX 1070 Ti Ethereum Mining Performance

Ethereum’s Byzantium hard fork is over, and now the NVIDIA GeForce GTX 1070 Ti graphics card has been released. The GeForce GTX 1070 Ti is an interesting card for cryptocurrency fans, as it has 512 more CUDA cores than the GeForce GTX 1070 and still uses GDDR5 memory. When mining Ethereum, the speed and latency of the memory subsystem matter more than any other part of the silicon. That is why the mighty NVIDIA GeForce GTX 1080 with GDDR5X doesn’t perform as well as the lower-priced GeForce GTX 1070 when it comes to mining Ether. The NVIDIA GeForce GTX 1070 Founders Edition runs $399 ($379 non-FE), and the new NVIDIA GeForce GTX 1070 Ti Founders Edition runs $449. That’s not bad considering the NVIDIA GeForce GTX 1080 FE runs $549 ($499 non-FE) and the NVIDIA GeForce GTX 1080 Ti FE runs $699.

Since we have all of these graphics cards, we figured we’d put the new NVIDIA GeForce GTX 1070 Ti, with 2,432 CUDA cores and 8GB of GDDR5 memory running at 8Gbps, to work mining some Ethereum.

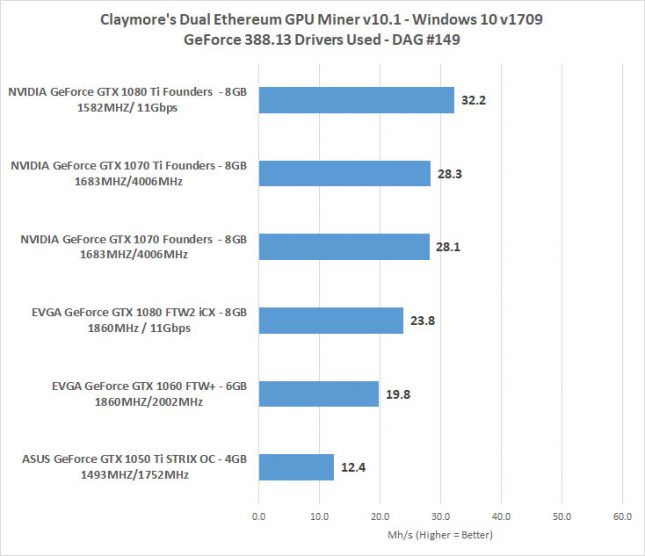

In stock form, we found the GeForce GTX 1070 Ti was just 0.2 MH/s faster than the older GeForce GTX 1070. The extra CUDA cores don’t translate into more mining performance, and because both cards have identical memory bandwidth (256 GB/s, from 8Gbps memory on a 256-bit-wide interface), performance was essentially the same.
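The 256 GB/s figure follows directly from the per-pin data rate and the bus width. The short Python sketch below just reproduces that arithmetic for both cards; the function name is our own illustration, not part of any vendor tool.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    Each pin moves `data_rate_gbps` gigabits per second; multiply by the
    number of pins (the bus width) and divide by 8 to convert bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


# Memory configuration quoted above for both cards:
# 8Gbps GDDR5 on a 256-bit-wide interface.
gtx_1070 = memory_bandwidth_gb_s(8.0, 256)
gtx_1070_ti = memory_bandwidth_gb_s(8.0, 256)

print(f"GTX 1070:    {gtx_1070:.0f} GB/s")
print(f"GTX 1070 Ti: {gtx_1070_ti:.0f} GB/s")
```

Since the inputs are identical, the extra CUDA cores never enter the calculation, which matches the near-identical stock hashrates.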

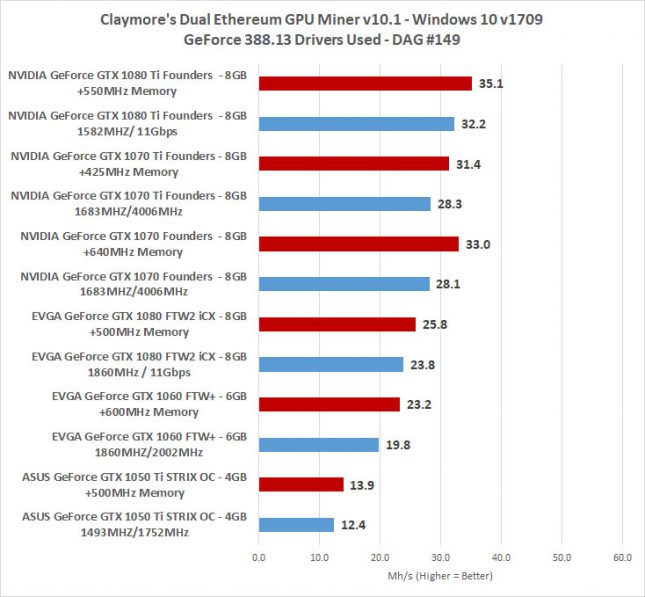

When we looked at the memory subsystem on the GeForce GTX 1070/1070 Ti cards, we noticed that some were dropping down to 1900MHz when mining while others remained at 2000MHz. If we closed the mining application and opened other 3D applications, the clocks jumped back up to 2000MHz. The memory clocks shouldn’t drop like that, and we’ve reported what we discovered to NVIDIA. They couldn’t duplicate what we were seeing, but we manually set the memory clocks on the cards running at 1900MHz to get them properly set at 2000MHz for the charts above. Keep that in mind when overclocking, as it takes a +200MHz overclock in EVGA Precision X to get the 100MHz increase shown in GPU-Z, since the memory is running at quad-rate speeds. We just wanted to point this out, as it might be happening to others. The good news is that manually overclocking the memory to the max will still get the highest clock possible from the card.
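Because the two utilities report the memory clock at different multiples, it is easy to misjudge an offset. Here is a rough Python sketch of the conversion, assuming only the 2:1 relationship we observed (+200MHz in Precision X showing as +100MHz in GPU-Z); the constant and function names are hypothetical helpers, not anything exposed by Precision X or GPU-Z.

```python
# Ratio observed on our cards: a +200MHz offset in EVGA Precision X
# produced a +100MHz change in the memory clock shown by GPU-Z.
PRECISION_TO_GPUZ_RATIO = 2


def gpuz_clock_mhz(observed_gpuz_mhz: float, precision_offset_mhz: float) -> float:
    """Memory clock GPU-Z should show after applying a Precision X offset."""
    return observed_gpuz_mhz + precision_offset_mhz / PRECISION_TO_GPUZ_RATIO


def offset_to_reach(target_gpuz_mhz: float, observed_gpuz_mhz: float) -> float:
    """Precision X offset needed to move GPU-Z's reading to the target clock."""
    return (target_gpuz_mhz - observed_gpuz_mhz) * PRECISION_TO_GPUZ_RATIO


# A card that fell to 1900MHz while mining, restored to its 2000MHz spec.
needed = offset_to_reach(2000, 1900)
print(f"Precision X offset needed: +{needed:.0f}MHz")
print(f"GPU-Z reading afterwards: {gpuz_clock_mhz(1900, needed):.0f}MHz")
```

On a card that is not affected by the clock drop, the same +200MHz offset would instead push GPU-Z to 2100MHz, so it is worth checking GPU-Z before and after applying any offset.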

We also applied overclocks to the cards, and as you can see, overclocking really does help the hashrate each card can deliver. Our NVIDIA GeForce GTX 1070 FE was a beast and was able to overclock +640MHz on its Samsung-branded GDDR5 memory for 33.0 MH/s. The new NVIDIA GeForce GTX 1070 Ti FE that we had used Micron GDDR5 memory and could only overclock +425MHz on the memory for 31.4 MH/s. We don’t have enough cards on hand to get into a Samsung versus Micron GDDR5 argument, but those are the results.

We also have an EVGA GeForce GTX 1070 Ti FTW2 graphics card at our disposal and ran it overnight for 8.5 hours with the memory overclocked as high as we could get it with full stability. We managed to drop the power target down to 60% and overclock the Micron-branded GDDR5 memory by +625MHz (an actual +425MHz due to the clock-drop bug on our platform that we already covered). This took our hashrate from around 28.3 MH/s in stock form up to an average of 31.3 MH/s. The NVIDIA GeForce GTX 1070 Ti FE memory also got this high and was running 31.4 MH/s, so it looks like that is around the hashrate you’ll get on an overclocked 1070 Ti. This is slightly more than a 10% production increase, and we’ll take it.
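For anyone checking the math, the Python sketch below works out the percentage gain from the two EVGA hashrates and the bug-adjusted memory offset. It assumes the bug costs 100MHz in GPU-Z terms (2000MHz down to 1900MHz) and that this equals 200MHz in Precision X terms at the 2:1 ratio we saw; the variable names are illustrative only.

```python
def percent_gain(before: float, after: float) -> float:
    """Relative increase from `before` to `after`, in percent."""
    return (after - before) / before * 100


# EVGA GeForce GTX 1070 Ti FTW2 results: stock vs. overclocked average.
stock_mh_s = 28.3
overclocked_mh_s = 31.3
gain = percent_gain(stock_mh_s, overclocked_mh_s)

# Assumed: the clock-drop bug costs 100MHz as read in GPU-Z, which is
# 200MHz in Precision X terms at the 2:1 ratio observed on these cards.
BUG_DROP_GPUZ_MHZ = 100
PRECISION_TO_GPUZ_RATIO = 2
entered_offset = 625
effective_offset = entered_offset - BUG_DROP_GPUZ_MHZ * PRECISION_TO_GPUZ_RATIO

print(f"Hashrate gain: {gain:.1f}%")
print(f"Effective memory offset: +{effective_offset}MHz")
```

The gain works out to about 10.6%, and the effective offset lands at +425MHz, the same figure the 1070 Ti FE reached.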

The EVGA GeForce GTX 1070 Ti FTW2 card had the GPU sitting at 65C, with the memory and VRM temps also at 65C, after nearly 9 hours of mining on an open-air bench. Those are good numbers, and the fans were spinning at just 634 RPM, so this was nearly silent mining. Power consumption on the GeForce GTX 1070 Ti was respectable. Our Intel X99 workstation board is power hungry, so at idle the entire system was pulling 149 Watts. With the card in stock form, we were getting 291 Watts. Lowering the power target to 65% dropped that to 262 Watts. A further reduction to 50% got that number down to 242 Watts, but it wasn’t stable. Both 1070 Ti cards deliver about the same performance, but the EVGA GeForce GTX 1070 Ti FTW2 Gaming runs $499.99 shipped and the NVIDIA GeForce GTX 1070 Ti Founders Edition runs $449.99 shipped. For mining, the blower-style card for $50 less appears to be the easy choice.
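Since these are whole-system readings at the wall, one rough way to isolate the mining load is to subtract the 149 Watt idle baseline. The Python sketch below does that, assuming the stock and 65% readings were both taken on the EVGA card whose stock hashrate was about 28.3 MH/s. Power above idle is only a proxy for card power, since mining also adds some CPU and platform load, and no hashrate was recorded at the 65% setting, so efficiency is only computed for stock.

```python
IDLE_SYSTEM_W = 149   # whole X99 system at idle
STOCK_MINING_W = 291  # mining, card at stock settings
PT65_MINING_W = 262   # mining, 65% power target
STOCK_MH_S = 28.3     # assumed: stock hashrate of the same card


def above_idle(wall_watts: float) -> float:
    """Wall power above the idle baseline; a rough proxy for card power,
    since mining also adds some CPU and platform load."""
    return wall_watts - IDLE_SYSTEM_W


stock_delta = above_idle(STOCK_MINING_W)
pt65_delta = above_idle(PT65_MINING_W)
saving_w = STOCK_MINING_W - PT65_MINING_W
stock_efficiency = STOCK_MH_S / stock_delta  # MH/s per watt above idle

print(f"Above idle: stock {stock_delta} W, 65% target {pt65_delta} W")
print(f"Wall saving at 65%: {saving_w} W ({saving_w / STOCK_MINING_W:.1%})")
print(f"Stock efficiency: {stock_efficiency:.3f} MH/s per watt above idle")
```

Working from the delta over idle rather than the raw wall number keeps the power-hungry X99 platform from masking how much the power target actually saves.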

At the end of the day, the best bang for the buck remains with the NVIDIA GeForce GTX 1070 series. With a suggested retail price of $379 on board partner cards, it remains the best NVIDIA card for mining from our perspective. We got 33 MH/s from our overclocked GeForce GTX 1070 and weren’t able to match that with the new GeForce GTX 1070 Ti cards. The extra CUDA cores on the 1070 Ti don’t do anything significant for cryptocurrency mining, so there’s no need to pay extra for them! This is also good news for gamers, as it should mean the GeForce GTX 1070 Ti won’t see inflated prices and should be available to purchase.
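A simple way to frame "bang for the buck" is dollars per MH/s. The Python sketch below uses the prices and best overclocked hashrates quoted in this article; it assumes the $399 and $449 Founders Edition prices for the two FE cards and the $499.99 shipped price for the EVGA card, and it ignores electricity costs. Keep in mind that the 33.0 MH/s came from one particularly good GTX 1070 FE sample, so other cards may land lower.

```python
# (card, price in USD, best overclocked hashrate in MH/s) from this article.
# Founders Edition prices are used for the two FE cards.
cards = [
    ("GeForce GTX 1070 FE", 399.00, 33.0),
    ("GeForce GTX 1070 Ti FE", 449.00, 31.4),
    ("EVGA GeForce GTX 1070 Ti FTW2", 499.99, 31.3),
]

# Lower is better: how many dollars each MH/s of hashrate costs up front.
cost_per_mh = {name: price / mh_s for name, price, mh_s in cards}

for name, dollars in sorted(cost_per_mh.items(), key=lambda item: item[1]):
    print(f"{name:<30} ${dollars:.2f} per MH/s")
```

The GTX 1070 comes out cheapest per MH/s, and at the $379 partner-card price the gap would be even wider if a partner card reached similar clocks.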

Be sure to check out our articles on Ethereum mining:

- The Best GPU For Ethereum Mining NVIDIA and AMD Tested

- GeForce GTX 1070 Ethereum Mining Small Tweaks For Great Hashrate and Low Power

- Silent Ethereum Mining on EVGA GeForce GTX 1060 at 22 MH/s

- Ether Mining At Various DAG Sizes to see future Hashrates

- Ethereum Hashrate Performance Drop Might Be Coming – AMD & NVIDIA GPUs Tested

- AMD Radeon RX Vega 64 and Vega 56 Ethereum Mining Performance

- AMD’s New Mining Block Chain Optimized Driver Tested

- AMD Radeon RX Vega 64 Liquid Cooled – Ethereum Mining At 44 MH/s